Milestone-Proposal:The Development of RenderMan® for Photorealistic Graphics


Docket #:2022-09

This Proposal has been approved, and is now a Milestone

To the proposer’s knowledge, is this achievement subject to litigation? No

Is the achievement you are proposing more than 25 years old? Yes

Is the achievement you are proposing within IEEE’s designated fields as defined by IEEE Bylaw I-104.11, namely: Engineering, Computer Sciences and Information Technology, Physical Sciences, Biological and Medical Sciences, Mathematics, Technical Communications, Education, Management, and Law and Policy. Yes

Did the achievement provide a meaningful benefit for humanity? Yes

Was it of at least regional importance? Yes

Has an IEEE Organizational Unit agreed to pay for the milestone plaque(s)? Yes

Has the IEEE Section(s) in which the plaque(s) will be located agreed to arrange the dedication ceremony? Yes

Has the IEEE Section in which the milestone is located agreed to take responsibility for the plaque after it is dedicated? Yes

Has the owner of the site agreed to have it designated as an IEEE Milestone? Yes

Year or range of years in which the achievement occurred:

1981-1988

Title of the proposed milestone:

The Development of RenderMan® for Photorealistic Graphics, 1981-1988

Plaque citation summarizing the achievement and its significance; if personal name(s) are included, such name(s) must follow the achievement itself in the citation wording: Text absolutely limited by plaque dimensions to 70 words; 60 is preferable for aesthetic reasons.

RenderMan® software revolutionized photorealistic rendering, significantly advancing the creation of 3D computer animation and visual effects. Starting in 1981, key inventions during its development at Lucasfilm and Pixar included shading languages, stochastic antialiasing, and simulation of motion blur, depth of field, and penumbras. RenderMan®’s broad film industry adoption following its 1988 introduction led to numerous Oscar®s for Best Visual Effects, and an Academy Award of Merit Oscar® for its developers.

Discussion of the citation's 1st sentence:

While RenderMan® was first created for rendering visual effects, particularly for the motion picture industry, its impact has since grown to include other industries such as design, advertising, and architecture.

Discussion of the citation's 2nd sentence:

In 1986, the graphics group at Lucasfilm was spun out as a separate company called Pixar.

Four of the five techniques in this sentence are among the inventions set forth in the claims of U.S. Patent 4,897,806. The fifth technique, "shading languages," includes the RenderMan® Shading Language (RSL), which was invented to let shaders be defined easily in software.

Discussion of the citation's 3rd sentence:

The Academy of Motion Picture Arts and Sciences (AMPAS) Award of Merit is an Oscar® which is "reserved for achievements that have changed the course of filmmaking" (see https://www.Oscars.org/sci-tech/academy-award-merit). In 2000, the Award of Merit Oscar® was presented to Rob Cook, Loren Carpenter, and Ed Catmull "for their significant advancements to the field of motion picture rendering exemplified in Pixar’s RenderMan®. Their broad professional influence in the industry continues to inspire and contribute to the advancement of computer-generated imagery for motion pictures."

The AMPAS presents an annual Best Visual Effects Oscar®. The section below titled "Best Visual Effects Oscar® films which used RenderMan® (1991-2019)" lists the 26 films that won this Oscar® during that 29-year period and whose visual effects were created at least in part using RenderMan®.

Supplementary Note 1: In addition to its Oscar®s, the AMPAS gives awards that recognize achievements related to technical and engineering advances. Among these is its Scientific and Engineering Award plaque, which is presented "for achievements that produce a definite influence on the advancement of the motion picture" (see https://www.Oscars.org/sci-tech/scientific-engineering). In 1992, the AMPAS recognized RenderMan® with a Scientific and Engineering Award plaque, which was presented "to Loren Carpenter, Rob Cook, Ed Catmull, Tom Porter, Pat Hanrahan, Tony Apodaca, and Darwyn Peachey for the development of 'RenderMan' software which produces images used in motion pictures from 3D computer descriptions of shape and appearance."

Supplementary Note 2: The ® designations will not appear on the face of the bronze plaque, but they will remain on the Milestone's webpage.

200-250 word abstract describing the significance of the technical achievement being proposed, the person(s) involved, historical context, humanitarian and social impact, as well as any possible controversies the advocate might need to review.

IEEE technical societies and technical councils within whose fields of interest the Milestone proposal resides.

In what IEEE section(s) does it reside?

Oakland-East Bay Section

IEEE Organizational Unit(s) which have agreed to sponsor the Milestone:

IEEE Organizational Unit(s) paying for milestone plaque(s):

Unit: Oakland-East Bay Section

Senior Officer Name: Sarah Mings

IEEE Organizational Unit(s) arranging the dedication ceremony:

Unit: Oakland-East Bay Section

Senior Officer Name: Sarah Mings

IEEE section(s) monitoring the plaque(s):

IEEE Section: Oakland-East Bay Section

IEEE Section Chair name: Sarah Mings

Milestone proposer(s):

Proposer name: Brian A. Berg

Proposer email: Proposer's email masked to public

Please note: your email address and contact information will be masked on the website for privacy reasons. Only IEEE History Center Staff will be able to view the email address.

Street address(es) and GPS coordinates in decimal form of the intended milestone plaque site(s):

1200 Park Ave., Emeryville, CA 94608 USA; Latitude 37.8326714, Longitude -122.2836945

Describe briefly the intended site(s) of the milestone plaque(s). The intended site(s) must have a direct connection with the achievement (e.g. where developed, invented, tested, demonstrated, installed, or operated, etc.). A museum where a device or example of the technology is displayed, or the university where the inventor studied, are not, in themselves, sufficient connection for a milestone plaque.

Please give the details of the mounting, i.e. on the outside of the building, in the ground floor entrance hall, on a plinth on the grounds, etc. If visitors to the plaque site will need to go through security, or make an appointment, please give the contact information visitors will need.

Three brick columns support the letters of "PIXAR" at the entrance to the headquarters of Pixar Animation Studios, and this plaque will be mounted on the rightmost of these three columns. Access is 24/7.

Are the original buildings extant?

Yes. In the 1980s, RenderMan® development work was largely performed at a Lucasfilm satellite office at 3210 Kerner Blvd., Bldg. C, San Rafael CA 94901. A cover business name, Kerner Optical Co., was posted at the entrance to keep the work taking place inside confidential.

Details of the plaque mounting:

At the entrance to the Pixar Animation Studios campus.

How is the site protected/secured, and in what ways is it accessible to the public?

There is 24/7 access to the rightmost brick column at the entry to Pixar Animation Studios, but the public is not allowed access to the company campus.

Who is the present owner of the site(s)?

Pixar Animation Studios

What is the historical significance of the work (its technological, scientific, or social importance)? If personal names are included in citation, include detailed support at the end of this section preceded by "Justification for Inclusion of Name(s)". (see section 6 of Milestone Guidelines)

The RenderMan® product changed not just the visual effects industry, but the film industry as a whole. Within three years of its 1988 release, computer graphics had become the norm in the visual effects industry, and RenderMan® had become the dominant rendering tool in the film industry. As of 2022, RenderMan® had been used in over 500 motion pictures, including 15 winners of the Best Animated Feature Oscar®, and 98 nominees for and 26 winners of the Best Visual Effects Oscar®. For 17 years in a row (1996-2012), RenderMan® was used by every film that won the Visual Effects Oscar®, as well as by all of the nominees (see https://AwardsDatabase.Oscars.org/). For the years 1990-2021, RenderMan® was used by:

• 84% of Best Visual Effects Oscar® winners (26 of 31)

• 83% of Best Visual Effects Oscar® nominees (96 of 116)

• 71% of Best Animated Feature Oscar® winners (15 of 21)

The effects became so good that even people who created them for a living couldn't always tell what was an effect and what wasn't. Visual effects moved from being a specialty used mainly for science fiction films to being a regular tool in the filmmaker's repertoire. With the 1994 release of Forrest Gump, which received the Best Visual Effects Oscar®, RenderMan®'s use in mainstream movies reached the point where it was no longer obvious which portions of a movie had been created using computer-generated imagery (CGI). Since then, RenderMan® has also moved into other industries, including design, advertising, and architecture.

Many of the technical advances in rendering developed at Lucasfilm/Pixar during the 1980s endure to this day:

• Micropolygons

• Physically-based reflection and lighting

• Stochastic sampling

• Motion blur

• Depth of field

• Penumbras

• Blurry reflections/refractions

• Monte Carlo techniques

• Shading languages

These advances have had an extraordinary impact on the field of computer graphics, and have led to many further developments in both industry and academia. A large number of products, papers, and Ph.D. theses have built on this work.

Films and images created using the advances developed at Lucasfilm/Pixar from 1981-1988 include the following (note that Reyes evolved into RenderMan® in 1988):

• The Road to Point Reyes (July 1983): The first published image that was created using a shading language.

• "1984" (July 1984): The first ray-traced image to use Monte Carlo techniques, specifically for motion blur and penumbras.

• The Adventures of André and Wally B. (July 1984): The first animated short rendered with Reyes; it also used stochastic sampling and motion blur.

• Young Sherlock Holmes (1985): The first feature-length film to include a Reyes-rendered visual effects sequence, which also used depth of field. It was nominated for a Visual Effects Oscar®.

• Luxo Jr. (1986): The first film using Reyes to be nominated for a Best Animated Short Oscar®.

• Red's Dream (1987)

• Tin Toy (1988): The first film using RenderMan® to win a Best Animated Short Oscar®.

• The Abyss (1989): The first feature-length film using RenderMan® to win a Visual Effects Oscar®.

• Knick Knack (1989)

Awards related to this work (chronological order):

1985 ACM SIGGRAPH Computer Graphics Achievement Award: Loren Carpenter

1987 ACM SIGGRAPH Computer Graphics Achievement Award: Rob Cook

1990 ACM SIGGRAPH Computer Graphics Achievement Award: Richard Shoup and Alvy Ray Smith

1992 Academy of Motion Picture Arts and Sciences (AMPAS) Scientific and Engineering Award: Loren Carpenter, Rob Cook, Ed Catmull, Tom Porter, Pat Hanrahan, Tony Apodaca, and Darwyn Peachey

1993 ACM SIGGRAPH Computer Graphics Achievement Award: Pat Hanrahan

1993 ACM SIGGRAPH Steven Anson Coons lifetime achievement award: Ed Catmull

1995 ACM Fellow: Ed Catmull

1995 ACM Fellow: Loren Carpenter

1996 AMPAS Special Achievement Award: Toy Story

1999 ACM Fellow: Rob Cook

1999 National Academy of Engineering induction: Pat Hanrahan

2000 ACM SIGGRAPH Computer Graphics Achievement Award: David Salesin

2000 AMPAS Award of Merit (Oscar®): Rob Cook, Loren Carpenter, and Ed Catmull "for their significant advancements to the field of motion picture rendering exemplified in Pixar’s RenderMan®"

2000 National Academy of Engineering induction: Ed Catmull

2001 AMPAS induction: Rob Cook

2002 ACM Fellow: David Salesin

2003 ACM SIGGRAPH Steven Anson Coons lifetime achievement award: Pat Hanrahan

2006 National Academy of Engineering induction: Alvy Ray Smith

2006 IEEE John von Neumann Medal: Ed Catmull

2008 AMPAS Gordon E. Sawyer Award: Ed Catmull

2008 ACM Fellow: Pat Hanrahan

2009 ACM SIGGRAPH Steven Anson Coons lifetime achievement award: Rob Cook

2010 National Academy of Engineering induction: Rob Cook

2018 ACM SIGGRAPH Academy induction: Loren Carpenter, Ed Catmull, Rob Cook, Pat Hanrahan, Alvy Ray Smith, and David Salesin

2019 ACM Turing Award: Ed Catmull and Pat Hanrahan

Best Visual Effects Oscar® films which used RenderMan® (1991-2019) (see https://RenderMan.Pixar.com/movies and https://AwardsDatabase.Oscars.org/):

1991 Terminator 2: Judgment Day

1992 Death Becomes Her

1993 Jurassic Park

1994 Forrest Gump

1996 Independence Day

1997 Titanic

1998 What Dreams May Come

1999 The Matrix

2000 Gladiator

2001 The Lord of the Rings: The Fellowship of the Ring

2002 The Lord of the Rings: The Two Towers

2003 The Lord of the Rings: The Return of the King

2004 Spider-Man 2

2005 King Kong

2006 Pirates of the Caribbean: Dead Man's Chest

2007 The Golden Compass

2008 The Curious Case of Benjamin Button

2009 Avatar

2010 Inception

2011 Hugo

2012 Life of Pi

2014 Interstellar

2015 Ex Machina

2016 The Jungle Book

2017 Blade Runner 2049

2019 1917

The RenderMan® Interface Specification (RISpec) is an open API technical specification developed by Pixar Animation Studios for a standard communications protocol between 3D computer graphics programs and rendering programs to describe three-dimensional scenes, and to turn them into digital photorealistic images.
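To make the idea of a scene-description protocol concrete, here is a minimal sketch in Python that writes a scene as RIB (RenderMan® Interface Bytestream), the file form of the RISpec interface. It assumes the classic RISpec 3.x requests (Display, Format, Projection, WorldBegin/WorldEnd, LightSource, Surface, Color, Sphere) and the standard "distantlight" and "plastic" shaders; the function name `sphere_scene_rib` and the file name are hypothetical, and later RenderMan® releases changed the shading and lighting model, so treat this as a historical illustration rather than input for a current renderer.

```python
def sphere_scene_rib(image_name: str, radius: float = 1.0,
                     color: tuple = (1.0, 0.0, 0.0)) -> str:
    """Return a minimal RIB scene (one lit sphere) as text.

    Hypothetical helper: the request names follow the classic RISpec 3.x
    RIB binding; later RenderMan releases use a different shading model.
    """
    r, g, b = color
    requests = [
        f'Display "{image_name}" "file" "rgb"',    # where and how to write the image
        'Format 320 240 1',                         # x resolution, y resolution, pixel aspect
        'Projection "perspective" "fov" [30]',      # camera projection
        'Translate 0 0 6',                          # move the world away from the camera
        'WorldBegin',
        '  LightSource "distantlight" 1',           # standard light shader, handle 1
        '  Surface "plastic"',                      # standard surface shader
        f'  Color [{r} {g} {b}]',                   # surface color for what follows
        f'  Sphere {radius} {-radius} {radius} 360',  # radius, zmin, zmax, sweep angle
        'WorldEnd',
    ]
    return "\n".join(requests) + "\n"


if __name__ == "__main__":
    print(sphere_scene_rib("sphere.tif"), end="")
```

The modeling program only describes the scene; any compliant renderer could then turn the same RIB stream into an image, which is the separation between modeling and rendering programs that the RISpec was designed to provide.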

The RenderMan® Shading Language (RSL) is a component of the RISpec which is used to define shaders. A shader is a computer program that calculates the appropriate levels of light, darkness, and color during the rendering of a 3D scene, a process known as shading. RSL source code uses a C-like syntax, and the same RSL source can be compiled and used by any RenderMan®-compliant renderer, such as DNA Research's 3Delight and Sitexgraphics' Air.
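As an illustration of what a shader computes, the following Python function transliterates the widely distributed "plastic" surface shader from the RISpec's standard shader set: an ambient term, a diffuse term, and a specular highlight. It is a sketch under stated assumptions: the light list format, the vector helpers, and the parameter defaults are illustrative; the surface normal is assumed to already face the viewer (RSL's `faceforward` step is omitted); opacity is taken as 1; and the highlight uses the Blinn-style `pow(max(0, N·H), 1/roughness)` term that, as we understand it, the RISpec gives for `specular()`. Real RSL would be written in its C-like syntax and compiled by the renderer's own shader compiler.

```python
import math


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _normalize(v):
    length = math.sqrt(_dot(v, v))
    return tuple(x / length for x in v)


def plastic(N, V, Cs, lights, ambient=(0.0, 0.0, 0.0),
            Ka=1.0, Kd=0.5, Ks=0.5, roughness=0.1,
            specularcolor=(1.0, 1.0, 1.0)):
    """Shade one surface point and return its color Ci (opacity taken as 1).

    N: surface normal, assumed to already face the viewer.
    V: direction from the point toward the viewer.
    Cs: surface color.
    lights: list of (L, Cl) pairs, where L points from the surface toward the
            light and Cl is the light's color arriving at the point.
    """
    Nn, Vn = _normalize(N), _normalize(V)
    diffuse = [0.0, 0.0, 0.0]
    specular = [0.0, 0.0, 0.0]
    for L, Cl in lights:
        Ln = _normalize(L)
        n_dot_l = _dot(Nn, Ln)
        if n_dot_l <= 0.0:
            continue  # light is below the surface's horizon: contributes nothing
        H = _normalize(tuple(l + v for l, v in zip(Ln, Vn)))  # half-angle vector
        highlight = max(0.0, _dot(Nn, H)) ** (1.0 / roughness)
        for i in range(3):
            diffuse[i] += Cl[i] * n_dot_l
            specular[i] += Cl[i] * highlight
    # Ci = Cs * (Ka*ambient + Kd*diffuse) + specularcolor * Ks * specular
    return tuple(Cs[i] * (Ka * ambient[i] + Kd * diffuse[i])
                 + specularcolor[i] * Ks * specular[i] for i in range(3))
```

For a red surface lit head-on by a white light and viewed head-on, the diffuse term contributes red only while the highlight adds white, so the result is a pinkish (1.0, 0.5, 0.5); the non-white specular highlights discussed later in this proposal would come from a non-white `specularcolor`.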

The 2019 ACM Turing Award webpage [1] recognized Edwin E. (Ed) Catmull and Patrick M. (Pat) Hanrahan for their lifetime achievements, including their contributions to RenderMan®.

What obstacles (technical, political, geographic) needed to be overcome?

In 1980, computer graphics (CG) rendering was still fairly new, and numerous technical obstacles remained to be overcome, although the field had already made progress on at least these fronts in the years leading up to 1980:

• projecting the shape of virtual objects onto the screen (the mathematics of perspective transformations)

• determining which surfaces were visible vs. which ones were occluded by other objects

• applying patterns (called textures) to those visible surfaces

• computing hard-edged shadows from point-light sources

• illuminating surfaces with basic shading and highlights

• computing shadows and mirror reflections using ray tracing

• creating curved surface shapes using bicubic patches

• creating complex surface shapes using recursive fractal subdivision

1980: 9 Key Technical Obstacles for Computer Graphics in Film

The goal at Lucasfilm was to make images suitable for demanding applications like film, but the state of the art in 1980 fell drastically short of this goal. Attaining it would require tackling several issues which no one had previously addressed in a useful way, including the following 9 technical obstacles:

• Geometric complexity was very limited. Scenes generally contained only a few objects because of the limits of memory and computational speed. And the objects they did contain were usually very simple: mostly polygons, not a lot of small-scale nuance. State-of-the-art images might have as many as 40,000 polygons; what was needed was at least 2000 times that many.

• Surface appearances were rudimentary, making the images look unrealistic and simplistic, which was completely unsuitable for general use in film. They were mostly limited to either an artificial chalky look or an artificial plastic look.

• Aliasing (such as the visual stair-stepping of edges that occurs in an image when the resolution is too low) was an ever-present problem. Aliasing artifacts were egregious and pervasive despite numerous attempts to combat them, and they were particularly disturbing in moving objects (which are of course the norm in film). There were two possible approaches to this problem. One was analytic filtering of the geometric primitives: while this had been shown to eliminate aliasing, it was complex and computationally expensive. The other was point sampling, which was believed to have inherent aliasing that was theoretically impossible to eliminate: the sample density imposes a frequency limit (called the Nyquist limit), and features in the scene beyond that limit (such as small details) appear as artifacts under the “alias” of large-scale features.

• Motion blur. Physical cameras have a shutter that stays open for a period of time during which objects can move, so objects are blurred along the line of their motion on each frame of film. Rendering a sequence of motion picture frames without motion blur in effect point samples the scene at regularly spaced intervals of time, producing prominent, crawling aliasing artifacts. When CG objects are blended into a live action scene (as is the case with visual effects), the motion blur of the physical and virtual cameras has to match; otherwise, the CG objects can look pasted on instead of appearing to be part of the scene. In 1980, there was no viable method for rendering CG objects with motion blur that matched live action footage.

• Depth of field. Depth of field is an essential part of filmmaking. Without it, CG is limited to having every object in sharp focus. If a CG image does not have the same depth of field as the physical camera, it will look artificial and pasted on instead of appearing to be part of the scene. The only known approach in 1980 was to render a CG image with a pinhole lens, in which everything is in focus, and then blur parts of the resulting image after rendering. However, the result was a crude approximation with serious artifacts that made it unusable.

• Soft shadows. Shadows in CG images had hard edges, i.e., every piece of a surface was either completely lit or completely in shadow, whereas real lights of finite size cast shadows with soft-edged penumbras. There were two challenges: the first was how to compute soft shadows at all, and the second was how to create an approximation that would be computationally viable.

• Blurry reflections and refractions. Most shiny surfaces have reflections, but no one knew how to compute reflections and refractions for surfaces that were not perfect mirrors, where the reflected or refracted image is blurred.

• Complex surface appearances. Previous work on surface appearance was limited to a combination of a simple reflection model and the ability to map textures onto surfaces. No one had created a way to describe surfaces with the kind of complex appearance characteristics of most real-world objects.

• Artistic control. There was no general way for artists to design lights and surface appearances with enough control to match objects in live action footage, and with enough flexibility to create the kinds of non-physical appearances that might be used in animation or visual effects.

• Combinations of all of these. The solutions to these problems couldn’t exist in isolation. They all had to be able to interact without limitations. For example, there might be several complex, artistically designed surfaces that were moving, were out of focus, and which were in the penumbras of multiple moving and varying lights, and these surfaces had to be able to reflect and shadow each other while accounting for changes in motion, lighting, focus, etc., during the period that the virtual shutter was open.
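The aliasing problem described in this list can be demonstrated in a few lines. The Python sketch below point-samples two cosine patterns at a regular spacing of 10 samples per unit (an arbitrary choice for illustration): a fine 9-cycle detail, above the 5-cycle Nyquist limit, yields the same samples as a coarse 1-cycle feature, so it reappears under that "alias." The same arithmetic applies in time when frames are sampled at regular intervals, which is why motion rendered without blur crawls.

```python
import math


def sample_cos(cycles_per_unit, samples_per_unit, count):
    """Point-sample cos(2*pi*f*t) at regularly spaced positions t = k / rate."""
    return [math.cos(2 * math.pi * cycles_per_unit * k / samples_per_unit)
            for k in range(count)]


RATE = 10.0              # samples per unit length (or per unit of time)
NYQUIST = RATE / 2       # highest frequency these samples can represent: 5 cycles/unit

fine_detail = sample_cos(9.0, RATE, 20)     # 9 cycles/unit: above the Nyquist limit
coarse_feature = sample_cos(1.0, RATE, 20)  # 1 cycle/unit: below it

# The two sample sets agree up to floating-point rounding: the fine detail is
# indistinguishable from (appears under the "alias" of) the coarse feature.
max_difference = max(abs(a - b) for a, b in zip(fine_detail, coarse_feature))
print(f"largest difference between the two sample sets: {max_difference:.1e}")
```

No amount of post-processing of the regular samples can tell the two patterns apart, which is why the aliasing was believed to be inherent to point sampling.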

In short, computer graphics had enormous obstacles to overcome in 1980. Some were due to the computational limitations of that time, others were ones that no one knew how to address even with unlimited computation, and still others were considered theoretically impossible.

1980: Lucasfilm Attempts to Overcome the 9 Key Technical Obstacles for Computer Graphics in Film

Achieving the goal of making film-quality photorealistic images of complex scenes required overcoming all 9 of these obstacles. The approach taken was to start by developing a thorough understanding of the issues and the fundamentals, and only then to design algorithms that would come as close as possible to being actual solutions to these problems (and not just workarounds), even if those algorithms would require far more computational power to become practical. This approach required a lot of faith in the longevity of Moore’s law at a time when 20 people were sharing a single VAX-11/780 running at about 1 million instructions per second with 8 Mbytes of memory. For comparison, the Neural Engine in Apple's 2022 M2 chip is rated at 15.8 trillion operations per second, roughly 15.8 million times as fast, and the M2 has correspondingly more memory and bandwidth and uses less power, all at less than 1/200 of the cost. The cost per operation in 2022 is therefore on the order of one three-billionth of what it was in 1980.
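The speed and cost comparison above can be checked with a few lines of arithmetic. This sketch assumes, as labeled in the comments, that the VAX-11/780 delivered about 1 million instructions per second (from the text), that the 15.8-trillion figure for the M2 is Apple's rating for its Neural Engine in operations per second, and that the M2 costs 1/200 as much as the VAX (the text says less than that). Instructions and neural-engine operations are not strictly comparable, so the result is an order-of-magnitude estimate only.

```python
# Back-of-the-envelope comparison; every input is an assumption stated above.
VAX_OPS_PER_SEC = 1e6       # VAX-11/780: about 1 million instructions per second
M2_OPS_PER_SEC = 15.8e12    # Apple M2 Neural Engine rating: 15.8 trillion operations per second
M2_COST_FRACTION = 1 / 200  # M2 cost as a fraction of the VAX's cost (an upper bound)

speedup = M2_OPS_PER_SEC / VAX_OPS_PER_SEC
cost_per_op_fraction = M2_COST_FRACTION / speedup  # 2022 cost per op / 1980 cost per op

print(f"speedup: about {speedup:.3g} times")
print(f"cost per op: about {cost_per_op_fraction:.2g} of the 1980 figure")
```

Because the cost fraction is an upper bound, the computed cost per operation is an upper bound as well.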

Lucasfilm assembled the Lucasfilm Computer Division in 1980. Led by Ed Catmull, it was a small group with lots of work to do in many areas. It included a Hardware team to build the specialized equipment that was assumed to be required for whatever was designed. The Graphics team was led by Alvy Ray Smith. Loren Carpenter and Rob Cook were brought on in 1981 as the Rendering team, and other teams had other areas of focus. The Division operated in a very free-flowing and open environment in which everyone could (and did) kibitz on all of the many issues that arose in this brave new endeavor.

The results exceeded everyone’s wildest dreams as the team was able to find solutions to all 9 of these technical obstacles. The result was the ability to create photorealistic images that were radically more complex and nuanced than anything that had come before.

For a small number of the obstacles, the solutions developed were far beyond the computational limits of the time. However, the team also created approximations that were practical within the existing computational capacity and still met the quality requirements for film. Both the solutions and the practical approximations to them were important. Good approximations are only possible when they are built on a deep understanding of the problem space, so that tradeoffs can be made without creating unexpected issues. An understanding of the actual solutions informed the approximations, and the approximations enabled the field to get going without waiting for 30+ years of Moore’s Law.

These approximations tended to become underappreciated after they were no longer needed, but it is only because of them that it was possible to make film-quality images during the decades that these approximations were used. Their limitations were fully understood at the time, and they were never intended to be more than a temporary measure until Moore’s Law caught up.

1983-1985: The Overall Architecture Was in Place, and Reyes Software was Used in Two Film Clips

By 1983, the overall architecture was in place, and all of the core algorithmic breakthroughs had been invented and/or developed. By 1984, the algorithms had been implemented in a software program, initially called Reyes for the team’s aspiration to “Render Everything You Ever Saw.” That program was immediately used in production for an animated short (1984’s The Adventures of André and Wally B.) and for visual effects mixed with live action in a feature-length film (a segment of 1985’s Young Sherlock Holmes).

1986: The Reyes Software Was Fast Enough Without Specialized Hardware

Around 1986, a turning point occurred when it became clear, to everyone’s surprise, that the software was fast enough to be viable without specialized hardware. Rendering without specialized hardware had the tremendous advantage of being able to ride the Moore’s Law wave as new computers were built, instead of having to chase it by constantly updating custom-made hardware. Not requiring specialized hardware also opened up a possibility no one had expected: the renderer could be sold to others as a software product.

1988: Reyes Was Modified into RenderMan®, Which Was Offered As an Industry Product

Up until this point, the Reyes renderer had received scene information from the internal modeling/animation software through a custom-built interface that had been written in-house. What was needed now was to replace this interface with a public standard that any program could use to send scene descriptions to any renderer. The new interface was deliberately not limited to what was computationally feasible at the time, so that it could support any capability that a high-end renderer might have. In 1988, Reyes was modified to support the new interface, renamed RenderMan®, and offered for sale.

The combination of planning for the far future but building for the present day produced the conflicting pulls that led to the development of a product with capabilities way ahead of its time, yet immediately practical and usable. The real-world production rendering experience that this provided immediately started guiding its further maturity. This approach worked because the compromises had all been made intentionally with the long-term vision in mind. Both the code and the interface were elegantly designed and carefully implemented to support a product built so that it could continue to grow and adapt for many years.

The Concession re: Ray Tracing

The one major concession was to not support ray tracing initially. This choice proved wise for the following reasons:

• To have any hope of handling complex scenes, rendering time had to grow linearly with scene complexity, and ray tracing was inherently O(n log n). Ray tracing and similar effects were not fully integrated into the RenderMan® software until the 2010s.

• Ray tracing effects were either unimportant or of limited importance in most scenes in most movies; the one exception was soft shadows, which needed a viable workaround.

• For the occasional situation that absolutely required ray tracing effects (e.g., the glass bottles in one scene in A Bug's Life), a separate ray tracing program could be used to do those computations.

This concession to not support ray tracing led to two developments that were known to be temporary workarounds, but that made it possible to make film-quality images during the 30 years of Moore’s Law that it took to make this concession unnecessary:

• Reyes architecture. The architecture was built around a recursive bound/bucket-sort/split process in which rendering times scaled linearly with geometric complexity, and which was the key to being able to handle the amount of detail needed for film-quality scenes; it only became unnecessary in the mid-2010s, when computers became powerful enough to obviate it.

• Percentage closer filtering. A shadow map algorithm called percentage closer filtering was created that approximated the look of soft shadows and allowed artistic control over the shadow softness; while it was indeed a hack, it was a useful one that remained essential until the mid-2010s.
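The bound/bucket-sort/split loop can be sketched in a short Python program. This is a toy under stated assumptions, not Pixar's code: primitives are hypothetical axis-aligned screen rectangles with constant depth and color, "too large to render" is approximated by a size threshold (`MAX_GRID`, an assumed value), shading is only a placeholder comment, and visibility uses a single sample at each pixel center instead of stochastic sampling. It does follow the order described in this proposal: each primitive waits in the bucket containing the upper left corner of its bound, oversized primitives are split and re-bucketed, the rest are diced into micropolygons about 1/2 pixel on a side, and a bucket is resolved only after every micropolygon that can touch it has arrived.

```python
import math
from collections import defaultdict
from dataclasses import dataclass

BUCKET = 4       # bucket size in pixels (buckets were initially 4x4 pixels)
MICRO = 0.5      # target micropolygon edge length in pixels (about 1/2 pixel)
MAX_GRID = 16    # assumed limit on micropolygons per grid side before splitting


@dataclass
class Rect:
    """Hypothetical primitive: an axis-aligned screen rectangle [x0, x1) x [y0, y1)."""
    x0: float
    y0: float
    x1: float
    y1: float
    depth: float
    color: str


def bucket_of(p):
    """(row, col) of the bucket holding the upper left corner of p's bound."""
    return (max(0, int(p.y0 // BUCKET)), max(0, int(p.x0 // BUCKET)))


def split(p):
    """Split p in half along its longer side."""
    if p.x1 - p.x0 >= p.y1 - p.y0:
        xm = (p.x0 + p.x1) / 2
        return [Rect(p.x0, p.y0, xm, p.y1, p.depth, p.color),
                Rect(xm, p.y0, p.x1, p.y1, p.depth, p.color)]
    ym = (p.y0 + p.y1) / 2
    return [Rect(p.x0, p.y0, p.x1, ym, p.depth, p.color),
            Rect(p.x0, ym, p.x1, p.y1, p.depth, p.color)]


def dice(p):
    """Dice p into a grid of micropolygons no larger than MICRO on a side."""
    nx = max(1, math.ceil((p.x1 - p.x0) / MICRO))
    ny = max(1, math.ceil((p.y1 - p.y0) / MICRO))
    dx, dy = (p.x1 - p.x0) / nx, (p.y1 - p.y0) / ny
    return [Rect(p.x0 + i * dx, p.y0 + j * dy, p.x0 + (i + 1) * dx,
                 p.y0 + (j + 1) * dy, p.depth, p.color)
            for j in range(ny) for i in range(nx)]


def render(prims, width, height):
    rows, cols = math.ceil(height / BUCKET), math.ceil(width / BUCKET)
    waiting = defaultdict(list)   # primitives keyed by bucket
    micros = defaultdict(list)    # shaded micropolygons keyed by bucket
    for p in prims:
        waiting[bucket_of(p)].append(p)
    image = [[None] * width for _ in range(height)]
    for r in range(rows):                      # buckets in raster-scan order
        for c in range(cols):
            todo = waiting.pop((r, c), [])
            while todo:
                p = todo.pop()
                if max(p.x1 - p.x0, p.y1 - p.y0) > MAX_GRID * MICRO:
                    for q in split(p):         # too large: split, bound, re-bucket
                        b = bucket_of(q)
                        (todo if b == (r, c) else waiting[b]).append(q)
                    continue
                grid = dice(p)  # a real renderer would shade the whole grid here
                for m in grid:  # put each micropolygon in every bucket it overlaps
                    for rr in range(max(0, int(m.y0 // BUCKET)),
                                    min(rows, math.ceil(m.y1 / BUCKET))):
                        for cc in range(max(0, int(m.x0 // BUCKET)),
                                        min(cols, math.ceil(m.x1 / BUCKET))):
                            micros[(rr, cc)].append(m)
            # Every micropolygon that can touch this bucket has arrived: resolve it.
            zbuf = {}
            for m in micros.pop((r, c), []):
                for y in range(r * BUCKET, min(height, (r + 1) * BUCKET)):
                    for x in range(c * BUCKET, min(width, (c + 1) * BUCKET)):
                        sx, sy = x + 0.5, y + 0.5   # one sample per pixel center
                        if (m.x0 <= sx < m.x1 and m.y0 <= sy < m.y1
                                and m.depth < zbuf.get((x, y), math.inf)):
                            zbuf[(x, y)] = m.depth
                            image[y][x] = m.color
    return image
```

In this sketch a split piece or a micropolygon can only land in the current bucket or a later one in raster order, because its bound never starts above or to the left of its parent's; that is what makes it safe to resolve each bucket, and discard its micropolygon list, as soon as it is reached.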

What features set this work apart from similar achievements?

The development of RenderMan® included the creation of new algorithms, designs, and technologies, the most significant of which are discussed in this section. While these creations are credited to their main contributors, the frequent discussions amongst the team members allowed many others to play a part as well:

• Micropolygons. Every geometric primitive is turned into small polygons called micropolygons (in RenderMan®, these were quadrilaterals at the Nyquist limit, about 1/2 pixel on a side). Rendering operations after this initial step are performed on the micropolygons. This approach unifies and simplifies the calculations and thus enables small and fast code that is amenable to hardware optimization. [1981: Loren Carpenter]

• Reyes architecture. This was the key to handling extremely large amounts of geometric complexity. The screen is divided into buckets (initially 4x4 pixels), a bounding box is calculated for each geometric primitive in the scene, and each geometric primitive is placed in the bucket containing the upper left corner of its bounding box. The buckets are then processed in raster-scan order. If a primitive in the bucket is too large to render, it is split and the resulting pieces are bound and re-bucketed; otherwise, the primitive is diced into a grid of micropolygons, and the grid is shaded before the visibility of its micropolygons is determined. Shading before hiding was counterintuitive at the time because it shades points that are not visible, but shading batches of points on the same surface at the same time yields a dramatic overall gain in efficiency, and it also enabled new capabilities like displacement maps (see below). Each shaded micropolygon is then placed in every bucket that it overlaps. Once a bucket contains all of its micropolygons, the visibility of those micropolygons is determined, and the visible points are filtered to compute the pixels. This approach made most of the rendering computations amenable to hardware implementation, but it also proved to be a good approach for its initial software implementation. This method was used for more than 30 years until Moore's law obviated the need for it by making it economically viable to ray trace complex scenes. [1981: Loren Carpenter]

• Physically-based lighting and reflection. RenderMan® used the first illumination and reflection equations for rendering based on the physics of light, enabling it to accurately model light-surface interaction and create images that had materials with a realistic appearance. It calculated illumination using energy transfer, permitted non-white specular highlights, and rendered surface color based on the energy spectrum of the incident light, the reflectance spectrum of the surface, and the spectral response of photoreceptors in the eye. [1981: Rob Cook and Ken Torrance developed the model at Cornell. Rob Cook incorporated it into RenderMan®.]

• Stochastic sampling. There had been earlier applications of stochastic sampling, but a critical breakthrough led to 3 patents (listed below) whose inventions solved the aliasing problem that had plagued point sampling. The key was to place the sample locations stochastically instead of on a regular grid. Energy at frequencies above the Nyquist limit can neither be accurately represented nor eliminated; with this approach, however, that energy appears as noise instead of aliasing. Initially this noise was very noticeable and was itself an unacceptable artifact, but it was discovered that using a blue noise sampling pattern greatly reduced the noise and made the approach viable. It was also discovered that rod cells in the eye are arranged in a blue noise pattern, so the human visual system is accustomed to tuning out this particular type of noise. [1982-1983: Rodney Stock had the initial idea. Rob Cook developed the idea into an algorithm and code, and he also discovered the blue noise pattern needed to make it viable. Alvy Ray Smith found the rod cell research. 1985: a patent application was filed which led to 3 U.S. patents]

• Motion blur. By assigning each point sample a random time between when the shutter opened and when it closed, motion blur could be accurately simulated. Those random times were assigned using blue noise to keep the visible noise level low. For each sampling point, the vertices of each micropolygon that might overlap it during the frame had to be moved to their positions at that sample's assigned moment in time. Because this bounding was in the innermost rendering loop, it had to be done quickly; making it work in a reasonable amount of time required efficiently tracking the bounds of the moving micropolygons and updating their positions. [1983: Tom Porter realized stochastic sampling could be extended to solve motion blur. Rob Cook incorporated this idea into RenderMan®, while Tom Porter added it to Tom Duff’s quadrics ray tracer to create the image titled "1984."]

• Depth of field. By assigning each point sample a random position on the lens, depth of field could be accurately simulated. For each sample point, each micropolygon that might overlap it during the frame had to be moved to its position on the screen as seen from that exact lens position. Because this process was in the innermost rendering loop, making it fast enough required a great deal of care in efficiently tracking the bounds of the moving micropolygons and updating their positions. [1983: Rob Cook]

• Soft shadows (done the right way, though impractical for general scenes in the 1980s). Shadows are computed by tracing a "shadow ray" from each visible point on a scene object toward each light source. If the ray hits an object in between, the point is in shadow relative to that light; otherwise, it is lit. By aiming each shadow ray at a random position on each light, and by moving the objects and lights to the positions corresponding to the motion-blur time and depth-of-field lens position of the primary ray that reached that point, the fraction of each light that is visible from that point on the object can be estimated. [1983: Rob Cook.]

• Monte Carlo rendering. Many of the advances in this section (stochastic sampling, motion blur, depth of field, soft shadows, and blurry reflections and refraction) use randomness to perform numerical integration, an approach called Monte Carlo integration. This approach was first developed by physicists in the late 1940s to simulate the diffusion of neutrons through fissile material, an application quite different from rendering and unknown to the Lucasfilm team at the time. The Lucasfilm team was the first to use Monte Carlo methods in rendering, and that had its own enduring impact on the field beyond the advances in this proposal, as evidenced by the crucial role of Monte Carlo methods in significant rendering capabilities later developed by others (such as subsurface scattering and path tracing). [1983: Rob Cook]

• Percentage closer filtering (the imperfect but practical approximation to soft shadows). Even though penumbras arise only from area lights, this approximation treats lights as point sources. For each frame, an image of the scene is rendered from each light, storing depth instead of color in the pixels (a shadow map). For each visible point in the scene, its position on each shadow map is determined. If its distance from the light is greater than the distance stored in the shadow map, it is in shadow; otherwise, it is lit. That is how standard shadow maps work. The innovation was to look not just at that single point on each shadow map, but at a region around that point, and to determine the percentage of the shadow map samples in that region that were closer to the light than the visible point. The size of that region changed from small (near the visible point) to large (near the light), and was under artistic control. This worked reasonably well in most cases, although it took some manual adjustment. This algorithm had two effects: (1) it antialiased the shadows, and (2) it made them soft. The method remained in use for more than 30 years, until Moore's Law made it unnecessary. [1985: Rob Cook invented the algorithm. David Salesin implemented it. Bill Reeves integrated it into the modeling system.]

• Blurry reflections and refraction. Reflection and refraction ray directions are sampled from a distribution that matches the reflection or refraction function of the surface. Because the Reyes architecture gave up secondary rays in exchange for geometric complexity, Pixar had to render these and other ray tracing effects using specialized renderers for more than 30 years, until Moore’s Law finally allowed them to be incorporated into RenderMan® itself. [1983: Rob Cook]

• Complex surface appearances & artistic control. Development of the first shading language, which was the key to representing arbitrarily complex surface appearances and enabling artistic control of appearance. [1982: Rob Cook invented the idea of shading languages, and designed and implemented the first language - but it did not support the recursion needed for ray tracing and other effects not implemented in the Reyes architecture. 1987-1988: Pat Hanrahan and Jim Lawson designed and implemented a new shading language as part of the RenderMan® Interface Specification. This was a great improvement in numerous ways and included the recursion needed for ray tracing and other effects.]

• Displacement maps. Texture maps had previously been used to perturb surface normals, which could create the appearance of surface bumps (hence the name "bump maps"), although at close distances the flatness of these apparent bumps became obvious. However, because Reyes did shading before visibility, textures could be used to move the actual surface positions. This started as an artistic tool for adding small-scale geometric detail by painting instead of modeling, but it also had the advantage of adding detail without increasing the complexity of the geometric model. [1982: Rob Cook]

• Software implementation. A new rendering program implemented the Reyes architecture and all of the above breakthroughs and approximations. The code was written to be robust enough to handle any scene that artists could throw at it, and flexible enough to support future extensions and innovations. Originally called Reyes and later renamed RenderMan®, it is still in use today, though it has evolved continuously over time, with both minor and major revisions by several people. [1983: Rob Cook wrote the initial version]

• RenderMan® Interface Specification. This industry-standard rendering interface replaced the in-house interface between the modeling/animation system and the rendering software. It was designed for the entire suite of rendering capabilities, not just those implemented in the Reyes architecture. [1988: Pat Hanrahan wrote the interface. Pat Hanrahan and Jim Lawson modified the Reyes renderer to use it.]
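To make the stochastic sampling item above concrete, here is a minimal Python sketch of two ways to place stochastic samples in a unit pixel. It is an illustration under stated assumptions, not the patented RenderMan® code. `poisson_disk_samples` uses "dart throwing", which yields a Poisson-disk (blue noise) pattern by rejecting candidates that land too close to earlier samples. `jittered_samples` places one random point in each cell of a grid, a cheaper and commonly used approximation. Both function names and the `min_dist` parameter are hypothetical.

```python
import math
import random


def poisson_disk_samples(n_target, min_dist, max_attempts=10000, seed=0):
    """Dart throwing: accept a random point in the unit square only if it is
    at least min_dist away from every previously accepted point."""
    rng = random.Random(seed)
    samples = []
    attempts = 0
    while len(samples) < n_target and attempts < max_attempts:
        attempts += 1
        p = (rng.random(), rng.random())
        if all(math.dist(p, q) >= min_dist for q in samples):
            samples.append(p)
    return samples


def jittered_samples(n_per_side, seed=0):
    """Cheaper approximation: one uniformly random point inside each cell of
    an n_per_side x n_per_side grid covering the unit square."""
    rng = random.Random(seed)
    cell = 1.0 / n_per_side
    return [((i + rng.random()) * cell, (j + rng.random()) * cell)
            for j in range(n_per_side) for i in range(n_per_side)]
```

Unlike a regular grid, neither pattern repeats with a fixed period, so scene frequencies above the Nyquist limit turn into noise instead of coherent aliasing patterns. The minimum-distance constraint in dart throwing is what pushes that noise toward high frequencies.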
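The motion blur item above can be sketched as follows. This assumes linear vertex motion in screen space during the shutter interval and uses stratified random times as a simple stand-in for the blue-noise time assignment described in the text. `MovingMicropolygon`, `sample_hits`, and `stratified_times` are hypothetical names. The swept bounding box provides the cheap per-sample rejection test that the text says had to be fast.

```python
import random
from dataclasses import dataclass

Vec2 = tuple[float, float]


@dataclass
class MovingMicropolygon:
    """Hypothetical micropolygon whose screen-space vertices move linearly
    from p0 (shutter open, t = 0) to p1 (shutter close, t = 1)."""
    p0: list[Vec2]
    p1: list[Vec2]

    def at_time(self, t: float) -> list[Vec2]:
        """Vertex positions at normalized shutter time t in [0, 1]."""
        return [(a[0] + t * (b[0] - a[0]), a[1] + t * (b[1] - a[1]))
                for a, b in zip(self.p0, self.p1)]

    def swept_bound(self) -> tuple[float, float, float, float]:
        """Box containing every position the polygon occupies while the
        shutter is open (valid because the motion is assumed linear)."""
        xs = [p[0] for p in self.p0 + self.p1]
        ys = [p[1] for p in self.p0 + self.p1]
        return min(xs), min(ys), max(xs), max(ys)


def inside_convex(poly: list[Vec2], x: float, y: float) -> bool:
    """Point-in-convex-polygon test; assumes counterclockwise vertices."""
    for i in range(len(poly)):
        (ax, ay), (bx, by) = poly[i], poly[(i + 1) % len(poly)]
        if (bx - ax) * (y - ay) - (by - ay) * (x - ax) < 0:
            return False
    return True


def sample_hits(mp: MovingMicropolygon, x: float, y: float, t: float) -> bool:
    """Does the sample at (x, y) with assigned time t see this micropolygon?"""
    x0, y0, x1, y1 = mp.swept_bound()
    if not (x0 <= x <= x1 and y0 <= y <= y1):
        return False  # cheap rejection using the swept bound
    return inside_convex(mp.at_time(t), x, y)


def stratified_times(n: int, seed: int = 0) -> list[float]:
    """One random time in each of n equal slices of the shutter interval,
    shuffled so that time is not correlated with sample position."""
    rng = random.Random(seed)
    times = [(k + rng.random()) / n for k in range(n)]
    rng.shuffle(times)
    return times
```

Pairing each spatial sample with one of these times means a fast-moving micropolygon is seen by only some of a pixel's samples, which produces the blur.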
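For the depth of field item above, a small sketch under a thin-lens assumption shows how a point's screen position depends on the lens sample. The camera looks down +z, the lens is a disk in the z = 0 plane, and points at the focal distance F project to the same place for every lens position. Following the ray from lens point (lx, ly) through the point to the plane of focus gives a screen position of x/z + lx(1/F − 1/z). The names `sample_lens` and `project_from_lens` are hypothetical.

```python
import math
import random


def sample_lens(radius, rng):
    """Uniform random point on a circular lens of the given radius."""
    r = radius * math.sqrt(rng.random())  # sqrt keeps the density uniform
    theta = 2.0 * math.pi * rng.random()
    return r * math.cos(theta), r * math.sin(theta)


def project_from_lens(point, lens_xy, focal_distance):
    """Screen position (on the z = 1 image plane) of a camera-space point as
    seen through one lens position, for a thin lens focused at
    z == focal_distance."""
    x, y, z = point
    lx, ly = lens_xy
    # Pinhole projection plus a lens-dependent shift that vanishes at the
    # plane of focus, so in-focus points stay sharp.
    shift = 1.0 / focal_distance - 1.0 / z
    return x / z + lx * shift, y / z + ly * shift
```

Averaging over many lens samples spreads an out-of-focus point into a disk (its circle of confusion), while points at the focal distance remain sharp.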
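A minimal sketch of the soft shadow idea from the list above: shadow rays are aimed at random points on an area light, and the fraction that get through estimates how much of the light is visible. The scene is a hypothetical stand-in: a 2 × 2 square light at height `light_height` and an opaque half-plane occluder halfway up, covering x < 0. The sketch omits the coupling to motion-blur time and lens position described in the text.

```python
import random


def blocked_by_half_plane(p, q, plane_z, edge_x):
    """Hypothetical occluder: the opaque half-plane z == plane_z, x < edge_x.
    Returns True if the segment from p to q passes through it."""
    (px, py, pz), (qx, qy, qz) = p, q
    if (pz - plane_z) * (qz - plane_z) >= 0:
        return False  # both endpoints are on the same side of the plane
    s = (plane_z - pz) / (qz - pz)  # parameter where the segment meets it
    return px + s * (qx - px) < edge_x


def visible_fraction(point, light_height, n_rays=1024, seed=0):
    """Monte Carlo estimate of the fraction of a square area light (side 2,
    centered above the origin at z = light_height) visible from point."""
    rng = random.Random(seed)
    visible = 0
    for _ in range(n_rays):
        # Aim each shadow ray at a random position on the light.
        target = (rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0), light_height)
        if not blocked_by_half_plane(point, target, light_height / 2, 0.0):
            visible += 1
    return visible / n_rays
```

In this setup a ray from (px, 0, 0) crosses the occluder plane at x = (px + lx) / 2, so the exact visible fraction rises linearly from 0 at px = −1 to 1 at px = 1. That linear ramp is the penumbra.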
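The Monte Carlo rendering item above refers to estimating integrals by random sampling. The sketch below estimates one such integral, the fraction of a unit pixel covered by a surface whose edge runs along the diagonal, using plain and stratified (jittered) sampling. The integrand `edge_coverage` is a hypothetical example chosen because its exact value, 0.5, is known.

```python
import random


def mc_estimate(f, n, seed=0):
    """Plain Monte Carlo: average f at n uniformly random points in the unit
    square, which estimates the integral of f over a unit-area pixel."""
    rng = random.Random(seed)
    return sum(f(rng.random(), rng.random()) for _ in range(n)) / n


def jittered_estimate(f, n_per_side, seed=0):
    """Stratified (jittered) Monte Carlo: one random point per grid cell,
    which usually lowers the variance of the estimate."""
    rng = random.Random(seed)
    total = 0.0
    for j in range(n_per_side):
        for i in range(n_per_side):
            total += f((i + rng.random()) / n_per_side,
                       (j + rng.random()) / n_per_side)
    return total / n_per_side ** 2


def edge_coverage(x, y):
    """Hypothetical integrand: 1 where the pixel is covered by a surface
    whose edge lies along y = x, else 0. The exact coverage is 0.5."""
    return 1.0 if y < x else 0.0
```

With 10,000 samples both estimates land near 0.5. The jittered estimate is typically much closer because only the cells the edge passes through contribute randomness.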
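The percentage closer filtering item above can be sketched on a tiny depth map. This sketch assumes a fixed square filter window instead of the artist-controlled, varying region the text describes. `pcf_shadow_fraction`, its `radius`, and its `bias` parameters are hypothetical. The important detail is the order of operations: each texel is compared with the receiver's depth first, and only then are the binary results averaged.

```python
def pcf_shadow_fraction(depth_map, u, v, receiver_depth, radius, bias=0.0):
    """Percentage closer filtering sketch.

    depth_map[y][x] holds the distance from the light to the nearest surface
    seen through that texel. (u, v) is the texel the receiving point projects
    to, and receiver_depth is its own distance from the light. Returns the
    fraction of texels in a (2 * radius + 1)^2 window that are closer to the
    light than the receiver, i.e. the fraction of the window in shadow.
    """
    h, w = len(depth_map), len(depth_map[0])
    closer = total = 0
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            x = min(max(u + dx, 0), w - 1)  # clamp lookups to the map edges
            y = min(max(v + dy, 0), h - 1)
            total += 1
            # Compare each texel first, then average the binary results;
            # averaging the depths themselves would still give a hard,
            # aliased shadow edge.
            if depth_map[y][x] < receiver_depth - bias:
                closer += 1
    return closer / total
```

For example, with an occluder at depth 1.0 covering the two leftmost columns of a 5 × 5 map (depth 10.0 elsewhere), a receiver at depth 5.0 in the center column is fully lit with `radius=0`, one-third shadowed with `radius=1`, and 40% shadowed with `radius=2`. That gradual transition is what produces the soft edge.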
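The blurry reflections item above samples ray directions from a distribution that matches the surface's reflection function. The sketch below uses a Phong-style lobe, with density proportional to cosⁿ of the angle from the mirror direction, as a stand-in for that function. This lobe is an assumption chosen for simplicity, not necessarily the distribution used at Lucasfilm or Pixar. Larger exponents give sharper reflections. A production renderer would also reject or handle directions that fall below the surface, which this sketch does not do.

```python
import math
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(v):
    n = math.sqrt(dot(v, v))
    return tuple(c / n for c in v)


def reflect(d, n):
    """Mirror direction of incoming direction d about unit normal n."""
    k = 2.0 * dot(d, n)
    return tuple(a - k * b for a, b in zip(d, n))


def orthonormal_basis(w):
    """Two unit vectors perpendicular to unit vector w and to each other."""
    a = (1.0, 0.0, 0.0) if abs(w[0]) < 0.9 else (0.0, 1.0, 0.0)
    u = normalize(cross(a, w))
    return u, cross(w, u)


def sample_glossy(mirror, exponent, rng):
    """Direction around `mirror` with density proportional to
    cos(angle)^exponent (inverse-CDF sampling of the Phong lobe)."""
    cos_t = rng.random() ** (1.0 / (exponent + 1.0))
    sin_t = math.sqrt(max(0.0, 1.0 - cos_t * cos_t))
    phi = 2.0 * math.pi * rng.random()
    u, v = orthonormal_basis(mirror)
    return tuple(sin_t * math.cos(phi) * a + sin_t * math.sin(phi) * b
                 + cos_t * c for a, b, c in zip(u, v, mirror))
```

Averaging the colors returned by many such rays, each traced in its own sampled direction, produces the blurred reflection. Refraction works the same way with the refracted direction at the center of the lobe.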
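The shading language item above describes surface appearance as a program rather than a fixed formula. The Python sketch below illustrates that idea by composing small shading operations into a tree whose root yields a single grayscale intensity, in the spirit of the shade trees paper cited as [12]. It is not RenderMan Shading Language syntax. `Ctx` and every node name are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Callable


@dataclass
class Ctx:
    """Hypothetical shading context for one surface point."""
    n_dot_l: float  # cosine between surface normal and light direction
    n_dot_h: float  # cosine between surface normal and half-vector
    u: float        # surface texture coordinates
    v: float


Node = Callable[[Ctx], float]


def const(c: float) -> Node:
    return lambda ctx: c


def checker(scale: float) -> Node:
    """Procedural texture: alternating 0/1 squares in (u, v)."""
    return lambda ctx: float(
        (math.floor(ctx.u * scale) + math.floor(ctx.v * scale)) % 2)


def diffuse() -> Node:
    return lambda ctx: max(ctx.n_dot_l, 0.0)


def specular(exponent: float) -> Node:
    return lambda ctx: max(ctx.n_dot_h, 0.0) ** exponent


def mul(a: Node, b: Node) -> Node:
    return lambda ctx: a(ctx) * b(ctx)


def add(a: Node, b: Node) -> Node:
    return lambda ctx: a(ctx) + b(ctx)


# Root of a small tree: textured diffuse term plus a dimmed highlight.
surface: Node = add(mul(checker(4.0), diffuse()),
                    mul(const(0.5), specular(20.0)))
```

Because each node is an independent building block, an artist can rewire the tree to get a different material without changing the renderer. That flexibility is what shading languages generalized.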
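The displacement maps item above relies on Reyes shading surface points before visibility, so a texture lookup can move the points themselves. Below is a minimal sketch, assuming the surface has already been diced into a grid of vertices that each carry (u, v) coordinates, a position, and a unit normal. `displace` and the procedural `bumps` "texture" are hypothetical, and the normal recomputation a real renderer would need afterward is omitted.

```python
import math


def displace(vertices, height_fn, scale):
    """vertices: list of (u, v, position, unit_normal) tuples on a diced
    grid. Returns positions moved along the normal by scale * height_fn(u, v).
    (Recomputing normals from the displaced grid is omitted here.)"""
    out = []
    for u, v, (px, py, pz), (nx, ny, nz) in vertices:
        h = scale * height_fn(u, v)
        out.append((px + h * nx, py + h * ny, pz + h * nz))
    return out


def bumps(u, v):
    """Hypothetical 'painted' height texture: a smooth periodic pattern."""
    return math.sin(2 * math.pi * u) * math.sin(2 * math.pi * v)
```

For a flat 5 × 5 grid in the z = 0 plane with normals (0, 0, 1), the vertex at u = v = 0.25 rises to z = 0.1 with `scale=0.1`. Unlike a bump map, this changes the silhouette as well as the shading.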

Why was the achievement successful and impactful?

Supporting texts and citations to establish the dates, location, and importance of the achievement: Minimum of five (5), but as many as needed to support the milestone, such as patents, contemporary newspaper articles, journal articles, or chapters in scholarly books. 'Scholarly' is defined as peer-reviewed, with references, and published. You must supply the texts or excerpts themselves, not just the references. At least one of the references must be from a scholarly book or journal article. All supporting materials must be in English, or accompanied by an English translation.

Papers:

[1] Pioneers of Modern Computer Graphics Recognized with ACM A.M. Turing Award: Hanrahan and Catmull’s Innovations Paved the Way for Today’s 3-D Animated Films at https://awards.acm.org/about/2019-turing Media:1-Pioneers_of_Modern_Computer_Graphics_Recognized_with_ACM_A.M._Turing_Award.pdf

[2] Hanrahan, Pat (Princeton Univ.) and Lawson, Jim (Pixar) - SIGGRAPH Proceedings 1990: A Language for Shading and Lighting Calculations at https://dl.acm.org/doi/pdf/10.1145/97879.97911 Media:2-97879.97911(A_Language_for_Shading_and_Lighting_Calculations).pdf

[3] Levoy, Marc and Hanrahan, Pat (both at Computer Science Dept., Stanford Univ.) - SIGGRAPH Proceedings 1996: Light Field Rendering at https://dl.acm.org/doi/10.1145/237170.237199 Media:3-237170.237199(Light_Field_Rendering).pdf

[4] An Animating Spirit, Communications of the ACM, June 2020 (A story about the 2019 A.M. Turing Award winners Catmull and Hanrahan)

[5] Attaining the Third Dimension, Communications of the ACM, June 2020 (A Q&A with the 2019 A.M. Turing Award winners Catmull and Hanrahan)

[6] Turing Award Press Release (18 March 2020) at https://awards.acm.org/binaries/content/assets/press-releases/2020/march/turing-award-2019.pdf Media:6-Press_Release_turing-award-2019.pdf

[7] The Design of RenderMan, Hanrahan, Pat; Catmull, Edwin; IEEE Computer Graphics and Applications, July/August 2021

[8] Pixar's RenderMan turns 25, Seymour, Mike, 25 July 2013 Media:8-RenderMan-At-25.pdf

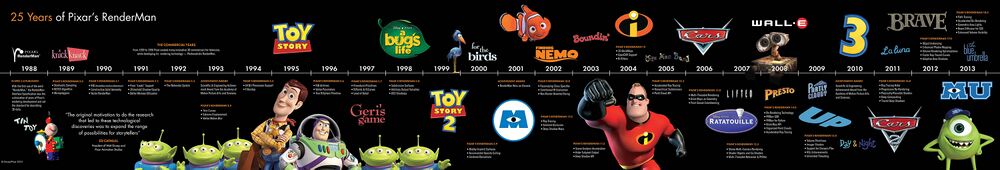

[9] 25 Years of Pixar's RenderMan (from Pixar's RenderMan turns 25 document)

[10] Cook, Robert L. and Torrance, Kenneth E., A Reflectance Model for Computer Graphics, Computer Graphics, Vol. 15, No. 3, July 1981, pp. 293-301.

[11] Cook, Robert L., Porter, Thomas, and Carpenter, Loren, Distributed Ray Tracing, Computer Graphics, Vol. 18, No. 3, July 1984, pp. 137-145.

[12] Cook, Robert L., Shade Trees, Computer Graphics, Vol. 18, No. 3, July 1984, pp. 223-231.

[13] Cook, Robert L., Stochastic Sampling in Computer Graphics, ACM Transactions on Graphics, Vol. 5, No. 1, January 1986, pp. 51-72.

[14] Reeves, William T., Salesin, David H., and Cook, Robert L., Rendering Antialiased Shadows with Depth Maps, Computer Graphics, Vol. 21, No. 4, July 1987, pp. 283-291.

[15] Cook, Robert L., Carpenter, Loren, and Catmull, Edwin, The Reyes Image Rendering Architecture, Computer Graphics, Vol. 21, No. 4, July 1987, pp. 95-102.

Books:

• Upstill, Steve, The RenderMan Companion: A Programmer's Guide to Realistic Computer Graphics, 1989

• Apodaca, Anthony; Gritz, Larry, Advanced RenderMan: Creating CGI for Motion Pictures, 1999

• Raghavachary, Saty, Rendering for Beginners: Image Synthesis Using RenderMan, 2004

• Stephenson, Ian, Essential RenderMan®, Springer, 2007

• Cortes, Rudy "Don"; Raghavachary, Saty, The RenderMan Shading Language Guide, 2007

• Armsden, Chris, The RenderMan Tutorial (Books 1-6), 2011

Patents Assigned to Pixar (regarding stochastic sampling):

[16] US Patent 4,897,806: "Pseudo-random point sampling techniques in computer graphics": Cook, Robert; Porter, Thomas; Carpenter, Loren; 19 June 1985 (filing date), 30 Jan. 1990 (issue date) Media:16-US4,897,806.pdf

US Patent 5,025,400 (issued on 18 June 1991) and US Patent 5,239,624 (issued on 24 Aug. 1993) were both based on the '806 patent specification.

Supporting materials (supported formats: GIF, JPEG, PNG, PDF, DOC): All supporting materials must be in English, or if not in English, accompanied by an English translation. You must supply the texts or excerpts themselves, not just the references. For documents that are copyright-encumbered, or which you do not have rights to post, email the documents themselves to ieee-history@ieee.org. Please see the Milestone Program Guidelines for more information.

Please email a JPEG or PDF of a letter in English, or with English translation, from the site owner(s) giving permission to place an IEEE milestone plaque on the property, and a letter (or forwarded email) from the appropriate Section Chair supporting the Milestone application to ieee-history@ieee.org with the subject line "Attention: Milestone Administrator." Note that there are multiple texts of the letter depending on whether an IEEE organizational unit other than the section will be paying for the plaque(s).

Please recommend reviewers by emailing their names and email addresses to ieee-history@ieee.org. Please include the docket number and brief title of your proposal in the subject line of all emails.